For years, we’ve interacted with AI primarily through text. We typed queries into Google, chatted with bots, and read AI-generated articles. But the world around us isn’t just text; it’s a rich tapestry of sights, sounds, and movement. Now, artificial intelligence is finally catching up, ushering in the era of multimodality.

Think of the latest AI models like Google’s Gemini and OpenAI’s GPT-4o as having a whole new set of senses. They can take in text, images, audio, and even video within a single model, and respond in more than one of these formats. This isn’t just an upgrade; it’s a fundamental shift that is poised to change how we live, learn, and work right here in India.

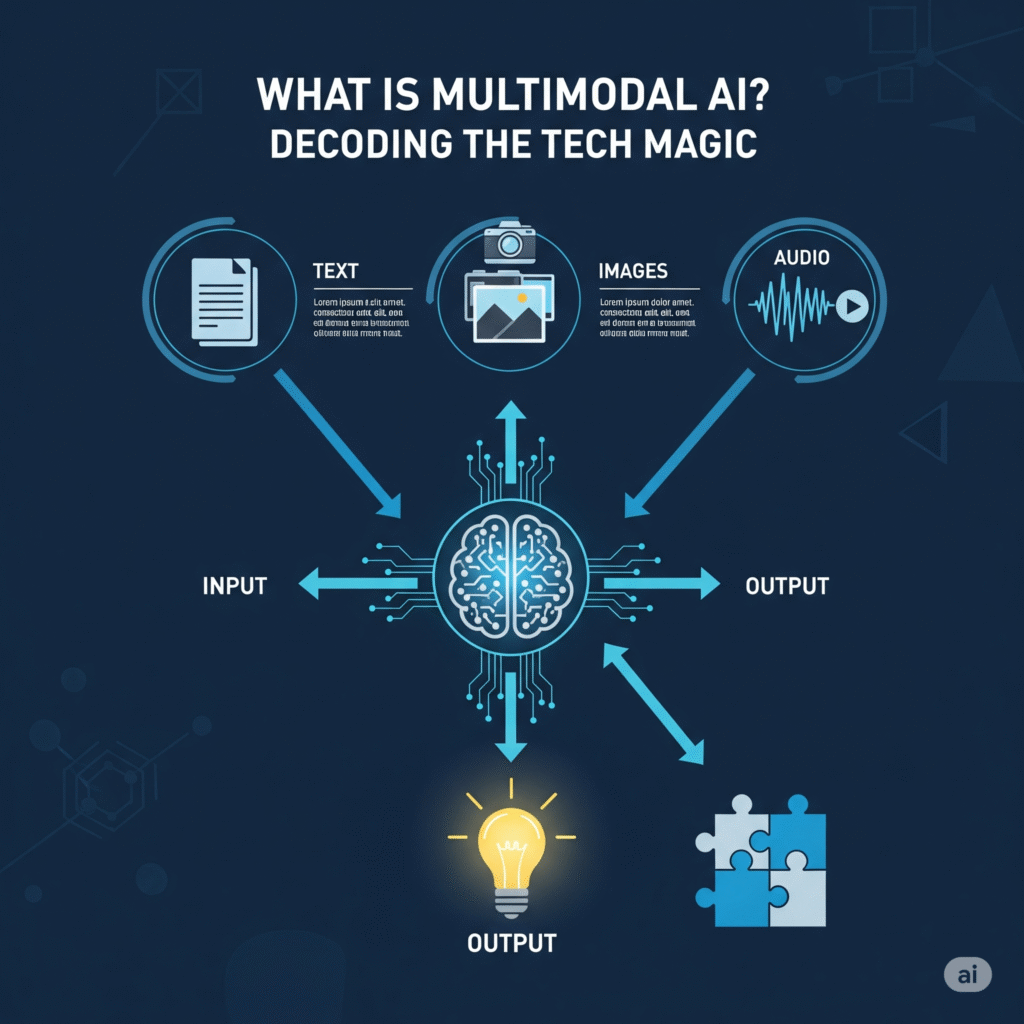

What is Multimodal AI? Decoding the Tech Magic

In simple terms, multimodal AI means that an AI system can understand and generate content using multiple types of data. Imagine a human being: we don’t just process words; we see the expressions on faces, hear the tone of voice, and understand the context of our surroundings. Multimodal AI aims to replicate this holistic understanding.

Previously, AI models were often specialized. One model might be great at understanding text (Natural Language Processing), another at recognizing images (Computer Vision), and yet another at processing audio (Speech Recognition). Multimodal AI brings these capabilities together into a single, unified model.
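To make "a single, unified model" a little more concrete, here is a minimal sketch of what one mixed text-and-image request can look like. The message shape is modelled on the content-parts format used by OpenAI's Chat Completions API; other providers use different shapes. The helper name `build_multimodal_message` and the placeholder image bytes are assumptions made for illustration, and the sketch only builds the payload without calling any service.

```python
import base64


def build_multimodal_message(question: str, image_bytes: bytes,
                             mime_type: str = "image/jpeg") -> dict:
    """Bundle a text question and an image into ONE user message.

    Hypothetical helper: the dict layout follows the content-parts
    convention (a list of typed parts), which is an assumption about
    the target API rather than a universal standard.
    """
    # Images are commonly sent inline as a base64 data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {
                "type": "image_url",
                "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
            },
        ],
    }


# Placeholder bytes standing in for a real photo (not a valid image).
fake_image = b"\xff\xd8\xff"
message = build_multimodal_message(
    "What dish can I make with these vegetables?", fake_image
)
print([part["type"] for part in message["content"]])
```

The point of the design is that both parts travel in the same message, so the model sees the question and the picture together instead of receiving them through two separate systems.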

Jaw-Dropping Scenarios That Showcase the Power:

- Real-time Video Analysis: Imagine pointing your phone camera at a complex machine part. A multimodal AI could identify the components, diagnose a potential issue based on visual and auditory cues (like a strange sound), and provide step-by-step troubleshooting instructions in Hindi through spoken audio and on-screen text. This has huge implications for the manufacturing and maintenance industries across India.

- Spoken Conversations with True Understanding: Forget rigid chatbot scripts. With multimodal AI, you can have a natural, spoken conversation. The AI can understand not just your words but also your tone of voice (detecting frustration, for instance), react to images you might show it (e.g., “What dish can I make with these vegetables?”), and respond with relevant information, even generating a recipe with accompanying images. Think about the possibilities for more intuitive customer support in regional Indian languages.

- Intelligent Image and Audio Editing: Imagine describing the edit you want on a photo (“Remove the person in the red saree and add a background of the Taj Mahal at sunset”). A multimodal AI can understand both the visual elements and the textual instructions to perform complex edits. Similarly, you could describe an audio fix (“Remove the background noise and enhance the speaker’s voice”) and get a clean audio file. This will empower content creators and businesses in India to produce high-quality multimedia with far less effort.

Revolutionizing Industries Across India

The ability of AI to see, hear, and speak simultaneously opens up a world of possibilities for various sectors in India:

- Education: Imagine interactive learning experiences where students can ask questions verbally, show images of problems, and receive multi-sensory explanations. AI tutors could adapt to a student’s learning style by understanding their engagement through facial expressions and speech patterns. This can bridge the gap in access to quality education across diverse regions.

- Customer Support: Say goodbye to frustrating text-based support. Multimodal AI can enable video-based help where customers can show the issue they are facing in real time. The AI can guide them visually and audibly in their preferred language, leading to faster and more effective resolutions, especially for complex technical issues.

- Content Creation: From generating marketing videos with AI narrators and relevant visuals from a simple text prompt to creating personalized social media content that adapts to trending audio and visual formats, multimodal AI will democratize content creation for individuals and businesses alike. Think about small businesses in Tier-2 and Tier-3 cities being able to easily create compelling video ads in their local languages.

- Accessibility: Multimodal AI can break down communication barriers for people with disabilities. For example, AI can translate sign language into spoken and written text in real time or describe visual content to visually impaired individuals through audio.

Frequently Asked Questions (FAQ)

Q1: How is multimodal AI different from just using separate AI models for text, images, and audio?

The key difference is integration and understanding across modalities. Separate models process each type of data in isolation. Multimodal AI models process these different types of data together, allowing them to understand the relationships and context between them, leading to a much richer and more human-like understanding.
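A toy sketch can make the difference tangible. The code below is not how real multimodal models work (they learn such relationships from data rather than from hand-written rules), and every name in it, including `Utterance`, the `tone` labels, and the word list, is hypothetical. It only illustrates why looking at text in isolation can miss what text and voice reveal together.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    text: str  # what was said
    tone: str  # hypothetical label derived from audio, e.g. "calm" or "frustrated"


# Tiny illustrative word list; a real system would not use one.
POSITIVE_WORDS = {"great", "thanks", "perfect"}


def text_only_sentiment(utterance: Utterance) -> str:
    """Judge the words alone, ignoring how they were spoken."""
    words = {w.strip(".,!?").lower() for w in utterance.text.split()}
    return "positive" if words & POSITIVE_WORDS else "neutral"


def joint_sentiment(utterance: Utterance) -> str:
    """Combine the words with the tone of voice (hand-written toy rule)."""
    base = text_only_sentiment(utterance)
    # Positive words delivered in a frustrated tone suggest sarcasm.
    if base == "positive" and utterance.tone == "frustrated":
        return "likely sarcastic"
    return base


call = Utterance(text="Great, thanks a lot.", tone="frustrated")
print(text_only_sentiment(call))  # judged on words alone
print(joint_sentiment(call))      # judged on words plus tone
```

The text-only function sees polite words and stops there, while the joint function can notice that the words and the voice disagree.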

Q2: Are these advanced multimodal AI models available to the average person in India?

While the most cutting-edge models like Gemini Ultra and GPT-4o might have some access restrictions or costs associated with their full capabilities, many of their features and smaller, more accessible versions are becoming increasingly available through various platforms and APIs. We’ll see more integration into everyday apps and services in the near future.

Q3: What are the ethical considerations of multimodal AI?

As with any powerful technology, there are ethical concerns. These include the potential for misuse in creating deepfakes or spreading misinformation through realistic AI-generated videos and audio. Robust safeguards, ethical guidelines, and media literacy initiatives will be crucial to mitigate these risks.

Q4: Will multimodal AI understand all Indian languages?

While current models are strongest with widely spoken languages like Hindi and English, there is significant ongoing research and development to expand the language capabilities of multimodal AI to include more regional Indian languages. This will be crucial for its widespread adoption and impact across India’s diverse linguistic landscape.

Q5: How can businesses in India start preparing for the age of multimodal AI?

Businesses should start by exploring the potential applications of multimodal AI in their specific industry. This could involve brainstorming new customer experiences, streamlining internal processes, or enhancing marketing efforts. Keeping an eye on the evolving AI landscape and investing in training and experimentation will be key to staying ahead of the curve.

It’s amazing how AI is moving beyond just reading and writing. With its ability to process audio and visuals too, it’s almost like AI is evolving to perceive the world the way we do. I’m especially excited about its potential impact in India, where diverse languages and cultures could benefit from more contextual understanding.